Pre-flame disclaimer: No person or company asked or paid me to write or comment on this topic.

Roughly one week ago, Tom’s Hardware published an opinion piece about Nvidia’s upcoming RTX series of graphics cards, titled “Just Buy It: Why Nvidia RTX GPUs Are Worth the Money”.

Shortly thereafter Gamers Nexus published a video titled “Response to Tom’s Hardware’s Insane “Just Buy It” Post [RTX 2080]”, with a corresponding blurb on social media posts saying “Tom’s Hardware’s Editor-in-Chief attempts to string together sentences that have meaning”.

“What? What the fuck is this?” -Steve Burke, Gamers Nexus

In this video, Steve essentially laughs off the entire Tom’s Hardware article, and sadly misunderstands a number of points. As a fan of him and Gamers Nexus more broadly, I found this particularly disappointing.

First, let’s pull out a second set of disclaimers. I own a mix of AMD and Nvidia GPUs, but otherwise have no financial investment in either company. I don’t personally have a contact at either company, or Tom’s Hardware, or Gamers Nexus. I’m just some guy.

I haven’t been a regular reader of Tom’s Hardware for close to a decade, and for the record I don’t agree with the “Just Buy It” position – and that’s totally okay because it’s an opinion piece, not an absolute declaration of fact. Earlier this year I became a regular reader / watcher of Gamers Nexus, but I also don’t agree with their assessment of the TH article.

Second, the context in which the article was published is incredibly important when digesting the article itself. Before Tom’s Hardware published “Just Buy It”, it had already published another opinion piece, by a different author, taking basically the opposite position: “Why You Shouldn’t Buy Nvidia’s RTX 20-Series Graphics Cards (Yet)”. Right out of the gate, the “Just Buy It” piece already has a counterbalance article from the exact same publication – not to mention just about every other tech publication out there. This juxtaposition is surely intentional, and no doubt accomplishes one of the author’s goals given that he said “the article was written […] in order to strike discussion about the new GPUs”. By contrasting so strongly with existing sentiment, the “Just Buy It” article is better poised to shift people towards a middle-ground mindset than a middle-ground article would have been.

TH: New technology usually gets cheaper if you wait long enough. So, inevitably, when a game-changing component or device hits the market, many will urge you to stay away until prices drop or a new standard gets wider adoption.

GN: If you wait long enough it’s not new technology anymore! That’s the whole point of waiting and it not being new.

This response from GN comes across as disingenuous. Avram Piltch, the “Just Buy It” author, isn’t trying to claim a new definition for the word “new”, and I don’t think readers reasonably come to the conclusion that he is. Nonetheless, GN spins it as such, instead of putting aside technicality and understanding what was actually meant.

If I were to buy a brand new Ryzen 2700, install it into my system, and then discuss my new CPU in an article or video, nobody would misunderstand me for using the phrase “my new CPU”. I would get zero accusations that I was trying to redefine the term “new”, despite the fact that technically, if I were to list the 2700 on eBay I couldn’t sell this as a “new” CPU – I would have to use the term “used”. After all, once I’ve opened up the box, pulled out the CPU, and installed it into a running system, it’s no longer “new”.

At this stage are any mainstream Ryzen CPUs truly “new” either? They’ve all been out for a number of months already, and likely won’t have publicly available successors until sometime next year. When does recently-released technology cease being new technology?

Understanding what the opening line of the article intends to convey isn’t a challenging linguistic exercise, making me all the more disappointed that Steve either doesn’t understand that intent, or doesn’t care about warping it.

GN is splitting hairs right off the bat. While I can appreciate that the video is made in a somewhat light-hearted manner, I still feel this is an unfair way to interpret the TH article. Putting such an interpretation at the start of the video primes the audience for a mindset of “haha this article is crazy!!11!” instead of calmly and fairly processing the information at hand. In GN’s own words:

“So we’re off to a bad start here.”

TH: The 15-inch Apple Studio display, one of the first flat panel monitors, cost $1,999 when it came out . . . in 1998. Today, you can get a used one on eBay for under $50 or a new 24-inch monitor for under $150, but if you bought one at the time, you had the opportunity to use a fantastic new technology when others didn’t.

GN: What? Like, what’s the argument? Is this argument seriously the thing that YouTube commenters post on our videos? Is the whole argument really just like “FIRST! FIRST! I WAS THE FIRST ONE TO USE IT!” […] “Just Buy It” because you can be “FIRST!” and that therefore makes you better.

I think there are many examples that could be used here that would better demonstrate the point in a way that’s more relatable – particularly to a younger demographic. One such example would be a hypothetical newly released game.

Let’s say that this game costs $59.99 at launch. It’s made by Seasoned Developer who made sure the game was properly polished and tested before releasing it. Games made by Seasoned Developer aren’t typically discounted much until a year or two after release, so there are of course those advocating to not buy the game at release. They say “wait a bit, it’ll be $39.99 before you know it!”. Their point is not entirely without merit, particularly for those with less to spend.

However, you obviously don’t get to play the fucking game if you don’t buy it.[1] In much the same way, if you buy a first-gen RTX card, you get to make use of fancy ray tracing and whatever else Nvidia is introducing with the 2000-series.

To further the analogy, you can imagine that instead of a game, we’re talking about an expansion for an existing game, and instead of a hypothetical game, maybe we’ll use an actual title to further drive home the point.

Civilization V (released in 2010) has two major expansions, Gods and Kings (released in 2012), and Brave New World (released in 2013). They’re all fucking expensive, particularly if you are unlucky enough to live in Australia and like video games. As of writing this, Steam is trying to sell Civ V for $69.99 AUD with no DLC, and the expansions are $49.99 AUD each.[2]

Rewind to 2012. Brave New World isn’t out yet – there’s just Civ V ($69.99) and a single expansion (Gods and Kings, $49.99). Choosing between just the base game and buying both ($119.98) at this point would be akin to choosing between a GTX 1080 or 1080 Ti and the (more expensive) RTX series. I hear you screaming at me that the RTX series hasn’t been independently reviewed and we don’t know the performance for sure in real world situations. Please hold that thought for a second because the analogy isn’t complex enough to also fit that in – we’ll get to that later.

Absolutely, you could wait a year or two for bigger sales and discounts to appear before you grab Gods and Kings. Or, you could buy it now, pay more, but have more time to play the game with the new content and enjoy all of it.

Steve, this isn’t about “FIRST!!!1!11!”, this is about sitting down and enjoying a video game – or in this case, prettier looking video games. Both you and I are in the target market here. Surely you can appreciate the point? Whether or not all this new tech is actually a significant upgrade to a gamer’s experience isn’t the point here. The point is that there’s something new, and based on the information released so far it’ll likely make the game better, and if you want it now instead of later, you pay more money for it. It may not have been worded in the best possible way, but at its core it’s not an unreasonable point.

TH: However, what these price-panicked pundits don’t understand is that there’s value in being an early adopter. And there’s a cost to either delaying your purchase or getting an older-generation product so you can save money. Unless and until final benchmark results show otherwise, the new features of the Turing cards make them worth buying, even at their current, sky-high prices.

GN: Let me repeat that, but just sort of truncate the middle. “There’s a cost… to saving money”. That is not how money works! There is one instance where money works that way, and it’s not this one.

GN’s interpretation of the word “cost” here is insulting to anyone with a firm grasp of the English language. Costs can be non-monetary – Avram isn’t trying to say what you’re saying he’s trying to say. For you to say otherwise is simply baffling.

This returns us once more to the previous example with Civ V. Yes, you can opt to save money, and not buy the Gods and Kings expansion. But if you do that, it comes at a cost. A non-monetary cost. If you don’t get the expansion now, you can’t play the expansion now.

Now I’ve (briefly) played Civ V with no expansions. It wasn’t awful. I didn’t love it. I’ve also played (less briefly) Civ V with the Gods and Kings expansion. It’s better. It’s more fun. It’s more enjoyable. If I could advise somebody on whether to buy Civ V by itself or Civ V with Gods and Kings, I would hands down say spend the extra on Gods and Kings.[3] Avram is making more or less the same point.

Yes, you can literally save money by buying the not-bad-but-not-as-great product (Civ V base game only / Pascal GPU), but there’s a cost.

One final note on this point concerns the phrase “Unless and until final benchmark results show otherwise”, which I believe has been edited since the original publication of the article (the quote on the GN video is slightly different from what I see written there). The operative word here is final. There are Nvidia-released benchmarks already. No, you shouldn’t make specific purchasing decisions based on that data. Yes, it might portray the cards in the best possible light and ignore (by omission) situations where the RTX series is comparatively weak.

That’s not the point being made there.

The point is that it’s not crazy to assume that the benchmark data released by Nvidia is very likely to be somewhat close to the actual performance that’ll show up in reviews. There might (will) be some differences, and there might (will) be situations where the RTX series looks significantly worse than Nvidia’s numbers suggest. But with this data you have a ballpark sense of the performance that at least the RTX 2080 will have.

With this unrefined approximate knowledge of performance, and more concrete knowledge of RTX-exclusive features such as ray tracing, Avram can be translated as saying:

“[Unless the independent benchmarks show notably lower performance than Nvidia’s preliminary released benchmarks], the new features of the Turing cards make them worth buying, even at their current, sky-high prices [because what they offer is worth that price premium (Re: Gods and Kings expansion)]”

Now, you can disagree with his conclusion (as I generally do, at least unless you’re loaded), but to twist the meaning of his words is honestly more ridiculous than said conclusion.

TH: Let’s say you are building a new system or planning a major upgrade and you need to buy a new video card this fall. You could buy the last-generation GTX 1080 Ti for as little as $526, but if you do, you won’t be able to take advantage of key RTX features like real-time ray tracing and great 4K gaming performance until your next upgrade.

GN: First of all 1080 Ti does 4K just fine, thank you very much. So, that is incorrect. Secondly, you can’t take advantage of it anyway because those games don’t exist yet. So sure, some will eventually exist with RTX or ray tracing features or whatever. But most people buying a 1080 Ti are probably aware that it is in fact not a 2080 Ti. […] I don’t really get quite what this means.

For the most part, I agree with GN regarding the 4K gaming performance comment. There is some validity to saying you want max performance possible if you’re going to try to game at 4K (so you can get good frame rates without sacrificing quality settings at such a demanding resolution), but I won’t delve into that too deeply. Either way, it’s worth taking this part with the context of the next, as they’re pretty linked together.

TH: Unless you plan to upgrade your GPU every year, you’re going to be stuck with technology that looks much more outdated in 2019 and 2020 than it does in 2018.

GN: That is.. again, accurate. And also probably 2021, and 2022, and generally as things age they get older.

TH: Yes, there are only 11 announced games that support ray tracing and only 16 that support DLSS (Deep Learning Super Sampling), but there will be a lot more in the months and years ahead. Do you want to put yourself behind the curve?

GN: Again, is this satire? I don’t – I genuinely don’t understand the statement. It sounds like he’s saying “unless you’re going to do this thing that’s crazy, like update your GPU every year, you’ll have outdated technology”.

[With Patrick (GN) now on camera as well]

He’s saying if you bought a 1080 Ti a year ago, you should buy a 2080 because that’ll be the one that’s good for the next 10 years? Yes, except you should also upgrade it next year because you’re upgrading every year.

..Except that’s not actually what he’s saying. This one I can let slide a bit because I agree the way it’s worded is confusing and unclear.

In essence, Avram is saying that the stuff being introduced with RTX (namely real time ray tracing and DLSS) is going to become the norm — or at the very least the “gold standard” to aim for — in upcoming games for the next few years. I’m not saying that he’s correct in this assessment, but that’s the reason the rest of what he’s said here makes sense within context. He’s basically saying that you’ll soon want a GPU that can pull off these tasks the same way that you want a GPU capable of anisotropic filtering (AF), or anti-aliasing (AA).

The implicit comparison is to imagine that technologies like AF and AA (for some technical reason) didn’t exist until the 1000-series of cards. If you went back in time to the release of the Pascal cards, you would only get the ability to use AF and AA if you bought the more expensive 1000-series or future cards. If you went for the value-for-money choice of an older but still well-performing 900-series card, you would miss out on these features for an entire upgrade cycle, in every game you played during that multi-year period until your next GPU. He’s saying “buy the one with the landmark new set of features, or you’ll miss out on them for a whole upgrade cycle”. He’s not speaking to existing GTX 1080 Ti owners so much as to those who look at the prices of the upcoming RTX series and then gravitate towards the cheaper 1000-series cards instead. He’s saying that doing so is potentially a mistake even if the pricing looks attractive, since AF and AA (read: ray tracing / DLSS) are about to be “the next big thing” for games.

Once again, whether you actually agree with his assessment or not is a different matter entirely and doesn’t inherently make the point moot.

TH: At Nvidia’s conference, CEO Jensen Huang described real-time ray tracing as the “holy grail” of graphical computing. While that statement might be hyperbole, ray tracing is a big deal, because it makes games look and feel much more life-like. The holy grail of gaming is a photorealistic play experience and ray tracing gets us much closer to that goal. It’s not just about light, shadow and reflections; it’s about immersion.

GN: So we’ve established that he’s not self-aware.

C’mon guys. “Might” can be used and commonly is used to mean “[is/does] [essentially]”. A couple of made up examples:

“While playing physical sports might make you more physically fit, playing Star Wars Battlefront II leaves you with a sense of pride and accomplishment.”

“Lead might not be good for human consumption, but it nonetheless remains of some value in a number of other applications, such as the Oddy test.”

We’re not disputing that physical sports are more physically involved than Battlefront II, or that lead is bad for human consumption, in these statements.

TH: This week, Nvidia showed a demo of Battlefield V where you can see a muzzle flash from another part of the world reflected in a soldier’s eyes and the fire from an explosion reflected off of the glossy finish of a car. That’s what you’d see if you were actually there and participating in the fight. And you’d also see the world in high resolution, not just 1080p.

GN: […] So, few things here. One: that’s wrong, there’s still visually inaccurate representations in this game, even though it looks pretty good – especially because it’s not fully ray-traced. It’s not literally real. You do know that, right? It’s not actually real life.

Perhaps[4] poorly worded, but I don’t believe Avram is trying to say that BF5 literally looks like reality. I’m hoping your (GN’s) comments are tongue-in-cheek.

He’s just saying “it’s more realistic-looking”, which — if we really get down to it — is what basically all advances in game visuals boil down to.

GN: […] Let’s be honest here. Game looks pretty good. But when you’re playing Battlefield, and you see a bad guy in front of you — or even a team mate, it doesn’t matter, it’s Battlefield — you’re probably just going to try and throw C4 on him, or another vehicle and blow it up. Not stare into the characters eyes and think about how beautiful it is.

This part is both true and frustrating at the same time. Isn’t the logical extension of this train of thought to just completely disregard any improvement to visual fidelity? Why bother buying a GTX 1080 Ti and cranking up the visuals to Ultra if you can just get a GTX 1070 and leave them on High? Why not leave them on Medium and grab a used GTX 970 for half the price again? Hell, keep whatever you have and just play on Low. It’s not like you’re going to actually want Battlefield to look nice.

In this worldview, when does a visual improvement become superfluous instead of welcome? Is there no value to having GPU manufacturers and game developers trying to constantly improve graphics in games?

If you’re saying that “wow it looks so much better / more realistic with ray tracing and stuff” is not a valid reason to spend money on the RTX series, why is that different from the above?

Don’t get me wrong, I myself am a cheapskate when it comes to graphical fidelity. I didn’t have the hardware to play Crysis 1 at high settings, native resolution (1200p), and a good framerate until around a year or so ago. My most played game is League of Legends – not exactly the pinnacle of graphical fidelity.

That doesn’t mean I can’t appreciate games looking nicer though, and it doesn’t mean I want to just not have visual improvements. It also doesn’t mean that I see no value in the RTX features. Whether they are worth that price premium isn’t the point here and can be debated. Whether or not it looks better is the point. And it does.

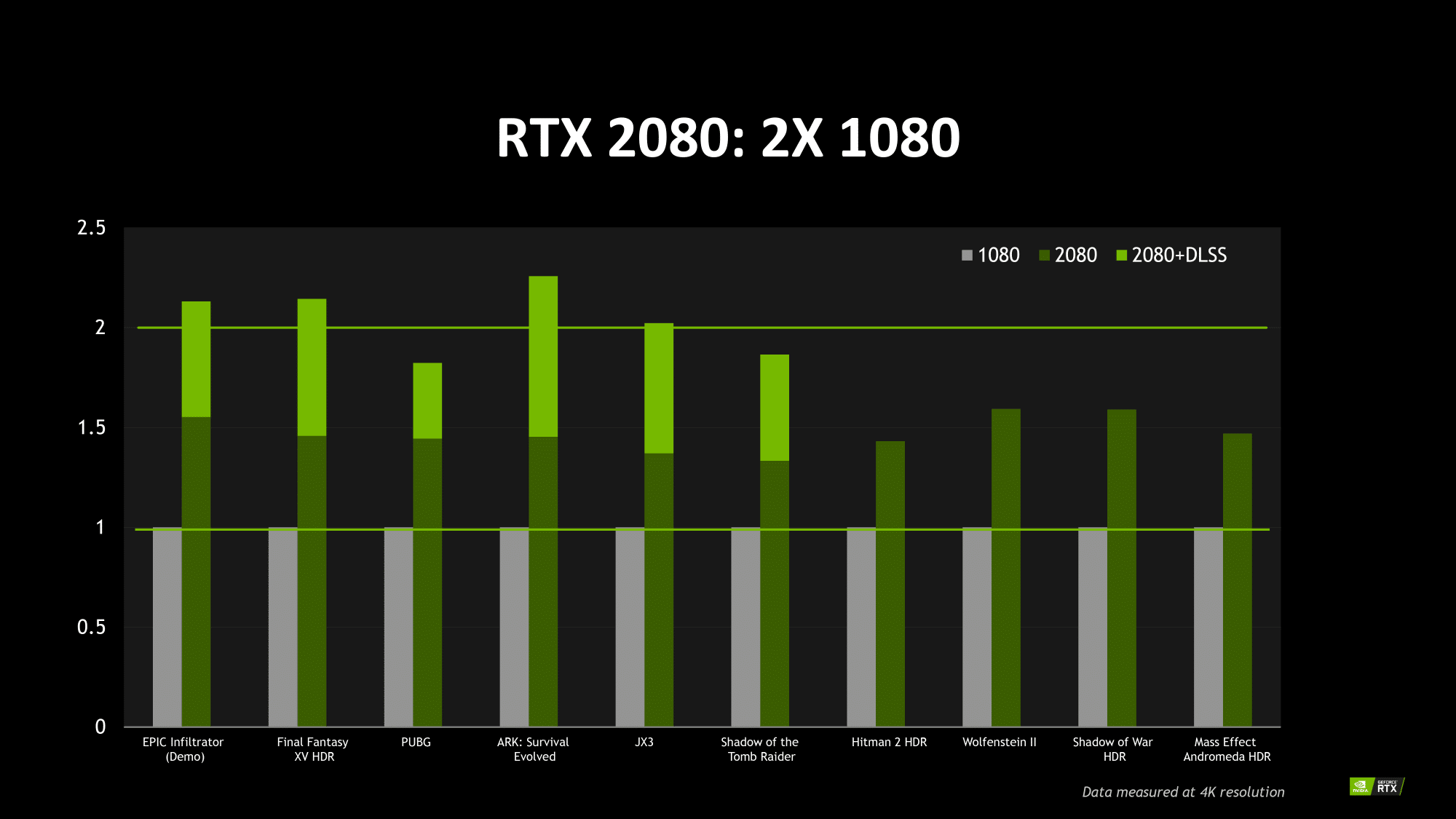

TH: According to Nvidia’s own numbers, the RTX 2080 delivers between 35 and 125 percent better performance on 4K games than the GTX 1080. The percentages were between 40 and 60 percent for games that did not have special optimizations for the new cards. In other words, you should be able to play existing 4K games smoothly that were either unplayable or choppy on 10-series cards.

GN: You know, somewhere between 0 and.. infinity. It’s out there somewhere. It’s either not better or WOAH, IT’S REALLY GOOD!

Dude, fuck off.[5] The performance numbers literally are 35-125% for the sampled games at 4K in the data which Nvidia has provided. You have the same graph that Tom’s Hardware does, which is the same one that everyone else has. “35-125%” is a factual statement about that data. You can say “it could’ve been phrased better, such as by saying X instead”, but simply making fun of a presentation of fact is mind-boggling. I don’t come to GN for sensationalised bullshit which I can easily get elsewhere.

TH: When you’re among the first to purchase a new architecture like Nvidia’s RTX cards, you take the risk that the technology won’t work as well as advertised right away, that you won’t find a ton of titles that support its special features and that the price will drop, making you feel like you wasted your money. However, when you pay a premium for cutting-edge components, you’re also buying time, time to enjoy experiences.

GN: WHAT!? WHAT DOES THAT MEAN? Buying time to enjoy experiences that by your own sentence preceding this one don’t exist yet. I don’t – what does that..? Like seriously, sit down, read the article, read that paragraph. Here’s a fun audience participation. Everyone post a comment below on your interpretation of this. It’s kind of like when you go to a shrink, and they show you a bunch of ink blots and ask you what it means. That’s what this is, it’s actually a psychological test to see how you are, and if you need help, like this guy might.

Steve, consider this my fun audience participation. Back to the debate though.

For this I’m just going to yet again revisit the Civ V example. This is the same thing Avram has said, but with the context changed to be about Gods and Kings instead.

When you’re among the first to purchase a new game or expansion like Gods and Kings, you take the risk that the game mechanics won’t work as well as advertised right away, that you won’t find a ton of other players to play multiplayer with (particularly among your friends) and that the price will drop in the future, making you feel like you wasted your money. However, when you pay a premium for just-released games/expansions, you’re also buying time – time to enjoy those games/expansions that you wouldn’t be able to if you had waited until they were on sale a year or two later.

If you buy it at release, you get to enjoy it at release. If you wait and buy it later, you miss out on being able to spend that extra time playing the game (as with Gods and Kings) / using an RTX card’s additional capabilities. I don’t find the language used in the article to be unintelligible, even if it’s worded a bit like an advertisement or satirical parody.

TH: Life is short. How many months or years do you want to wait to enjoy a new experience? You can sit around twiddling your thumbs and hoping that an RTX 2080 gets cheaper, or you can enter the world of ray-tracing and high-speed, 4K gaming today and never look back. When you die and your whole life flashes before your eyes, how much of it do you want to not have ray tracing?

Okay, yeah this one is over the top – particularly that last part. It’s written in hyperbole intentionally (I hope), but it still just sounds bizarre if you actually read it. It would be better suited to a parody article than a real article. Shoutout to /r/nottheonion and /r/nottheESEX/.

Final Words

There are a few other points made in the TH article and discussed in the GN video, but I don’t think there’s much more to say about them that isn’t just rehashing the points I’ve already made above.

You can disagree with the Tom’s Hardware article. You can say it’s poorly written at points. You are free to question the validity of the author’s suggestions and conclusions — I’m with you on all three, just as many other people are — but to completely throw this guy under the bus for attempting to present an opinion you don’t agree with is a level of lunacy far greater than the article itself ever reached.

And before anyone asks, I have no intention of buying first gen RTX cards, or recommending that any of my friends do so. You may be amused to know that I actually already said as much to some of my friends before I even knew of either article from Tom’s Hardware or Gamers Nexus. Here’s a couple of things I said:

I’ve seen the footage [of gameplay with RTX features]. It’s not worth [the price they’re asking] @_@

EXPENSIVE HARDWARE LOW PERFORMANCE [WITH RTX FEATURES ENABLED] UNDERWHELMING BENEFIT REEEEEEEE

So I don’t agree with “Just Buy It”, but I also think that Steve (GN) has been unnecessarily harsh. There’s a middle ground to be found here where we acknowledge that the RTX series is ungodly expensive, but that the features it brings are also neat, and might be worth paying a premium for.

Might.

1. We’ll pretend we can’t sail the seven seas for the sake of simplicity, plus we want to support Seasoned Developer because we like their games and want them to have a stable financial future so that they can make more good games.

2. Yes you can get them cheaper elsewhere (I did, at least minus Brave New World), but that’s not the point of the analogy.

3. (but shop around and look for a good deal in either case)

4. re: might; “perhaps” can be used as “Yeah, basically”

5. I say this bluntly because I think you’re able to process it without taking it personally. It’s a critique of part of the video, not of you as a person okay love you xoxo.